Lecture notes for week 1 of Intro to TensorFlow, the third course in the Coursera series “Machine Learning with TensorFlow on Google Cloud Platform”.

What is TensorFlow?

- TensorFlow is an open-source, high-performance library for numerical computation that uses directed graphs.

- The nodes represent mathematical operations (e.g., add).

- The edges represent the inputs and outputs of those operations.
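
To make the node and edge picture concrete, here is a minimal sketch in plain Python. It is not TensorFlow code: the `Node` class and the `evaluate` helper are hypothetical names made up for this illustration, and they only mimic the idea that nodes hold operations while edges carry values between them.

```python
import operator

class Node:
    """A graph node: an operation plus the nodes whose outputs feed it."""
    def __init__(self, name, op=None, inputs=(), value=None):
        self.name = name
        self.op = op                # callable applied to the input values
        self.inputs = list(inputs)  # incoming edges
        self.value = value          # used only by constant nodes

def evaluate(node):
    """Follow the incoming edges recursively and apply each operation."""
    if node.op is None:             # constant node: nothing to compute
        return node.value
    args = [evaluate(parent) for parent in node.inputs]
    return node.op(*args)

a = Node("a", value=3)
b = Node("b", value=4)
c = Node("add", op=operator.add, inputs=[a, b])  # edges: a -> add, b -> add

result = evaluate(c)
print(result)  # 7
```

The graph itself is just data (objects and references), which is why it can be described independently of the language that built it.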

Benefits of a Directed Graph

- A directed acyclic graph (DAG) is a language-independent representation of the code in your model.

- This makes graphs portable between different devices.

- TensorFlow can insert send and receive nodes to distribute the graph across machines.

- TensorFlow can optimize the graph by merging successive nodes where necessary.

- TensorFlow Lite provides on-device inference of ML models on mobile devices and is available for a variety of hardware.

- TensorFlow supports federated learning.
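
As a rough intuition for why merging successive nodes can help, the sketch below fuses a chain of elementwise operations into one callable so the data is traversed once instead of once per operation. This is a toy in plain Python under my own assumptions; `fuse`, `run_unfused`, and `run_fused` are hypothetical helpers, and TensorFlow's actual graph optimizer is far more involved.

```python
def fuse(*ops):
    """Merge a chain of elementwise operations into a single callable."""
    def fused(x):
        for op in ops:
            x = op(x)
        return x
    return fused

def run_unfused(data, ops):
    """One pass over the data per operation (one intermediate list each)."""
    for op in ops:
        data = [op(v) for v in data]
    return data

def run_fused(data, ops):
    """A single pass over the data using the merged operation."""
    merged = fuse(*ops)
    return [merged(v) for v in data]

ops = [lambda v: v + 1, lambda v: v * 2]  # two successive nodes
data = [1, 2, 3]

unfused_result = run_unfused(data, ops)
fused_result = run_fused(data, ops)
print(unfused_result, fused_result)  # [4, 6, 8] [4, 6, 8]
```

Both paths give the same values; the fused version simply avoids materializing the intermediate result.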

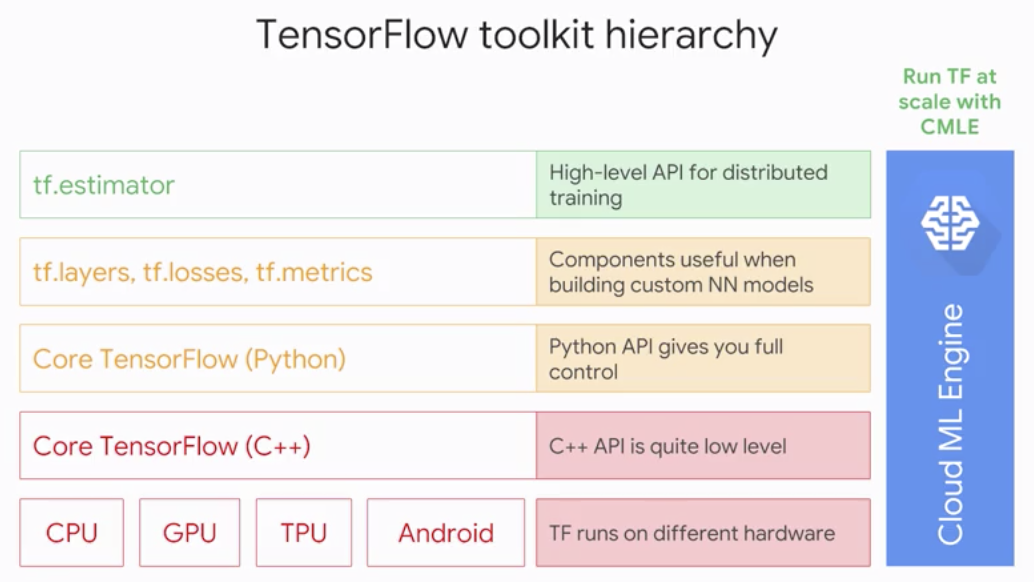

TensorFlow API Hierarchy

TensorFlow toolkit hierarchy

- The lowest level is a layer that’s implemented to target different hardware platforms.

- The next level is a TensorFlow C++ API.

- The core Python API contains much of the numeric processing code.

- Above that is a set of Python modules that provide high-level representations of useful NN components (good for custom models).

- Estimator knows how to train, evaluate, create checkpoints, save, and serve a model.

Lazy Evaluation

- The Python API lets you build and run directed graphs.

- Create the graph (build):

    ...
    c = tf.add(a, b)

- Run the graph (run):

    session = tf.Session()
    numpy_c = session.run(c, feed_dict=...)

- The graph definition is separate from the training loop because this is a lazy evaluation model: you need to run the graph to get results.

- tf.eager, however, lets you execute operations imperatively.
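
The build/run split can be sketched without TensorFlow. In the toy below, `Placeholder`, `Add`, and `run` are hypothetical names invented for this example; the point is only that constructing the graph performs no arithmetic, and values appear once `run` is called with a feed dictionary.

```python
class Placeholder:
    """A named input whose value is supplied only at run time."""
    def __init__(self, name):
        self.name = name

class Add:
    """A deferred addition: stores its inputs, computes nothing yet."""
    def __init__(self, left, right):
        self.left, self.right = left, right

def run(node, feed_dict):
    """Evaluate the graph rooted at `node` using the fed values."""
    if isinstance(node, Placeholder):
        return feed_dict[node]
    return run(node.left, feed_dict) + run(node.right, feed_dict)

# Build phase: no arithmetic happens here.
a = Placeholder("a")
b = Placeholder("b")
c = Add(a, b)

# Run phase: values flow through the graph only now.
lazy_result = run(c, feed_dict={a: 2, b: 5})

# Imperative style for comparison: the sum is computed immediately.
eager_result = 2 + 5

print(lazy_result, eager_result)  # 7 7
```

Printing `c` before the run phase would show an `Add` object rather than a number, which is the same surprise newcomers hit when they print a tensor in graph mode.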

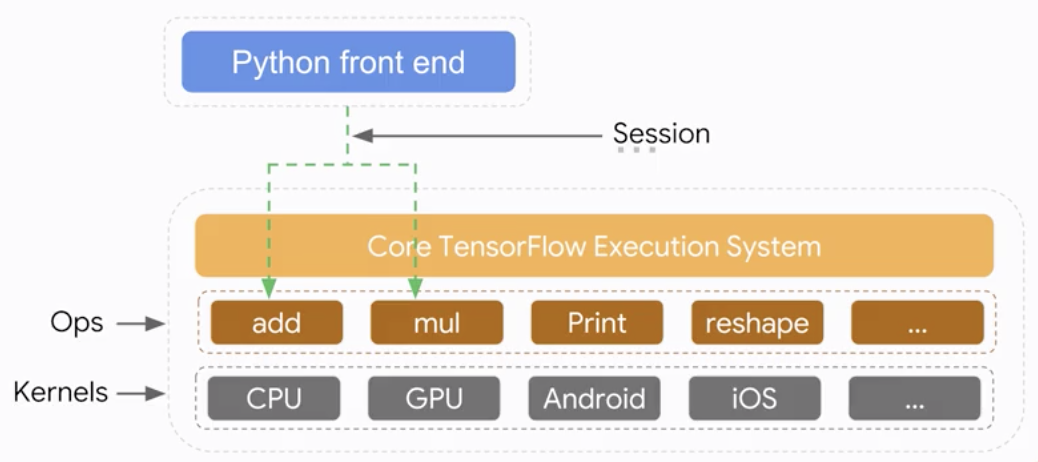

Graph and Session

- Graphs can be processed, compiled, remotely executed, and assigned to devices.

- The edges represent data as tensors, which are n-dimensional arrays.

- The nodes represent TensorFlow operations on those tensors.

- A Session allows TensorFlow to cache and distribute computation.

Session

- Execute TensorFlow graphs by calling run() on a tf.Session.
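
One way to picture what a session does when `run()` is handed several tensors at once is the toy below. It is a plain-Python sketch, not TensorFlow's implementation: `Op` and `ToySession` are hypothetical, and the caching behavior shown (a node shared by several fetches is computed once per `run()` call) is my simplifying assumption for illustration.

```python
class Op:
    """A node with a function and input nodes; counts how often it runs."""
    def __init__(self, fn, *inputs):
        self.fn, self.inputs, self.calls = fn, inputs, 0

class ToySession:
    """Evaluates one fetch or a list of fetches, caching within each run()."""
    def run(self, fetches):
        cache = {}

        def visit(node):
            if node not in cache:
                node.calls += 1
                cache[node] = node.fn(*[visit(i) for i in node.inputs])
            return cache[node]

        if isinstance(fetches, (list, tuple)):
            return [visit(f) for f in fetches]
        return visit(fetches)

x = Op(lambda: 3)
y = Op(lambda: 1)
total = Op(lambda a, b: a + b, x, y)   # shared by both fetches below
double = Op(lambda t: t * 2, total)
square = Op(lambda t: t * t, total)

sess = ToySession()
d, s = sess.run([double, square])
print(d, s, total.calls)  # 8 16 1
```

Fetching `double` and `square` in two separate `run()` calls would evaluate `total` twice in this toy, which is one reason to fetch related tensors together.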

Evaluating a Tensor

- It is possible to evaluate a list of tensors.

- TensorFlow in eager mode makes it easier to try things out, but is not recommended for production code.

    import tensorflow as tf
    from tensorflow.contrib.eager.python import tfe

    tfe.enable_eager_execution()  # Call exactly once

    x = tf.constant([3, 5, 7])
    y = tf.constant([1, 2, 3])
    print(x - y)

    # OUTPUT:
    # tf.Tensor([2 3 4], shape=(3,), dtype=int32)

Visualizing a graph

- You can write the graph out using tf.summary.FileWriter:

    import tensorflow as tf

    x = tf.constant([3, 5, 7], name="x")  # Name the tensors and the operations
    y = tf.constant([1, 2, 3], name="y")
    z1 = tf.add(x, y, name="z1")
    z2 = x + y
    z3 = z2 - z1

    with tf.Session() as sess:
        # Write the session graph to the summary directory
        with tf.summary.FileWriter('summaries', sess.graph) as writer:
            a1, a3 = sess.run([z1, z3])

Then list the summary directory:

    !ls summaries
    events.out.tfevents.1517032067.e7cbb0325e48

The event file is not human-readable.

- The graph can be visualized in TensorBoard.
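
Since the event file is not meant to be read directly, it can help to see what information a graph dump contains. The sketch below is only an analogy in plain Python: it writes a hand-typed edge list for the x, y, z1, z2, z3 example as Graphviz DOT text. The `edges` list and `to_dot` helper are hypothetical, the nodes are labeled by their Python variable names rather than by the names TensorFlow would assign, and none of this reflects the event file format or how TensorBoard renders graphs.

```python
# Hypothetical edge list mirroring the example graph: (input, operation node).
edges = [
    ("x", "z1"), ("y", "z1"),    # z1 = tf.add(x, y)
    ("x", "z2"), ("y", "z2"),    # z2 = x + y
    ("z2", "z3"), ("z1", "z3"),  # z3 = z2 - z1
]

def to_dot(edge_list, graph_name="g"):
    """Render a list of (source, target) edges as Graphviz DOT text."""
    lines = ["digraph {} {{".format(graph_name)]
    for src, dst in edge_list:
        lines.append("  {} -> {};".format(src, dst))
    lines.append("}")
    return "\n".join(lines)

dot_text = to_dot(edges)
print(dot_text)
```

Naming tensors and operations, as the example above does with `name="x"`, is what makes a rendered graph readable.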

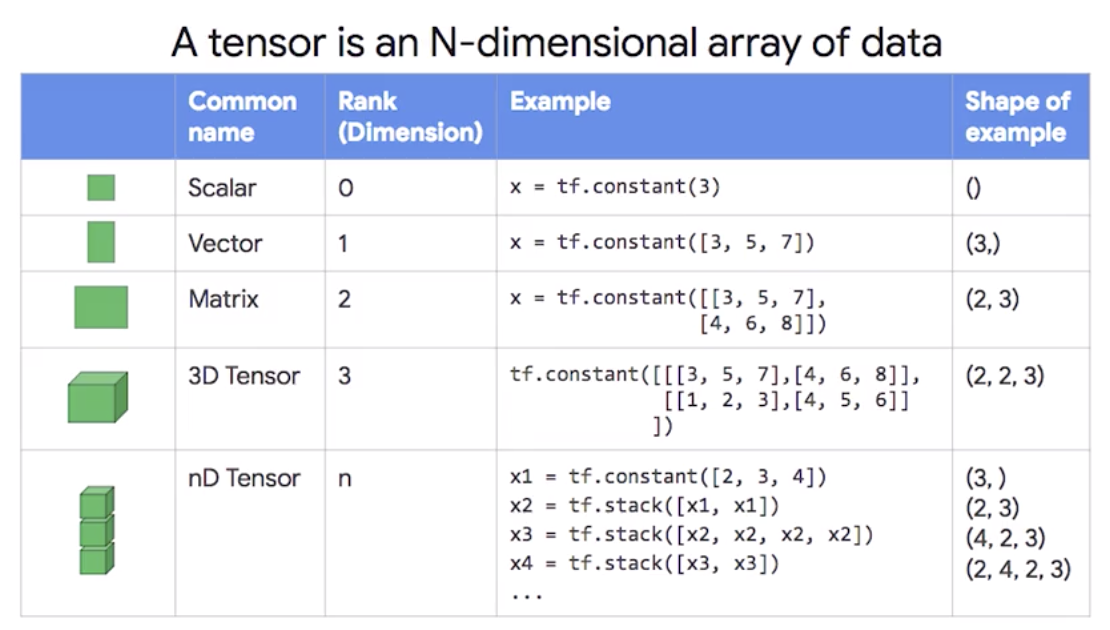

Tensors

- A tensor is an N-dimensional array of data.

What is a tensor
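
The "N-dimensional array" idea can be checked with nested Python lists. The `shape` helper below is a hypothetical function written for this note (it assumes the nesting is regular); the number of entries in the shape is the rank of the tensor.

```python
def shape(data):
    """Return the shape of a regularly nested list as a tuple."""
    dims = []
    while isinstance(data, list):
        dims.append(len(data))
        data = data[0] if data else None
    return tuple(dims)

scalar = 5                        # rank 0
vector = [3, 5, 7]                # rank 1
matrix = [[3, 5, 7], [4, 6, 8]]   # rank 2
cube = [matrix, matrix]           # rank 3

for t in (scalar, vector, matrix, cube):
    print(len(shape(t)), shape(t))
# 0 ()
# 1 (3,)
# 2 (2, 3)
# 3 (2, 2, 3)
```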

- Tensors can be sliced:

    import tensorflow as tf

    x = tf.constant([[3, 5, 7], [4, 6, 8]])
    y = x[:, 1]  # second column of every row

    with tf.Session() as sess:
        print(y.eval())

    # OUTPUT:
    # [5 6]

- Tensors can be reshaped:

    import tensorflow as tf

    x = tf.constant([[3, 5, 7], [4, 6, 8]])
    y = tf.reshape(x, [3, 2])

    with tf.Session() as sess:
        print(y.eval())

    # OUTPUT:
    # [[3 5]
    #  [7 4]
    #  [6 8]]

The reshaped tensor can then be sliced:

    import tensorflow as tf

    x = tf.constant([[3, 5, 7], [4, 6, 8]])
    y = tf.reshape(x, [3, 2])[1, :]  # second row of the reshaped tensor

    with tf.Session() as sess:
        print(y.eval())

    # OUTPUT:
    # [7 4]

Variables

- A variable is a tensor whose value is initialized and then typically changed as the program runs.

    import tensorflow as tf

    def forward_pass(w, x):
        return tf.matmul(w, x)

    def train_loop(x, niter=5):
        # Create variable, specifying how to init and whether it can be tuned
        with tf.variable_scope("model", reuse=tf.AUTO_REUSE):
            w = tf.get_variable("weights",
                                shape=(1, 2),  # 1 x 2 matrix
                                initializer=tf.truncated_normal_initializer(),
                                trainable=True)
        # "Training loop" of 5 updates to weights
        preds = []
        for k in range(niter):
            preds.append(forward_pass(w, x))
            w = w + 0.1  # "Gradient update"
        return preds

- tf.get_variable is helpful when you need to either reuse existing variables or create them afresh, depending on the situation.

    with tf.Session() as sess:
        # Multiplying [1,2] x [2,3] yields a [1,3] matrix
        preds = train_loop(tf.constant([[3.2, 5.1, 7.2], [4.3, 6.2, 8.3]]))  # 2 x 3 matrix
        # Initialize all variables
        tf.global_variables_initializer().run()
        for i in range(len(preds)):
            print("{}:{}".format(i, preds[i].eval()))

    # OUTPUT:
    # 0:[[-0.5322 -1.408 -2.3759]]
    # 1:[[0.2177 -0.2780 -0.8259]]
    # 2:[[0.9677 0.8519 0.724]]
    # 3:[[1.7177 1.9769 2.2747]]
    # 4:[[2.4677 3.1155 3.8245]]

- To summarize:

  - Create a variable by calling get_variable.

  - Decide how to initialize the variable.

  - Use the variable just like any other tensor when building the graph.

  - In a session, initialize the variable.

  - Evaluate any tensor that you want to evaluate.

- Placeholders allow you to feed in values, such as by reading from a text file:

    import tensorflow as tf

    a = tf.placeholder("float", None)
    b = a * 4
    print(a)

    with tf.Session() as sess:
        print(sess.run(b, feed_dict={a: [1, 2, 3]}))

    # OUTPUT:
    # Tensor("Placeholder:0", dtype=float32)
    # [ 4.  8. 12.]

Debugging TensorFlow programs

- Debugging TensorFlow programs is similar to debugging any piece of software:

  - Read error messages to understand the problem.

  - Isolate the method so it can be tested with fake data.

  - Send made-up data into the method.

  - Know how to solve common problems.

- The most common problem tends to be tensor shape. Common problems include:

  - Tensor shape

  - Scalar-vector mismatch

  - Data type mismatch

- Shape problems also happen because of batch size, or because you have a scalar when a vector is needed (or vice versa).

- Shape problems can often be fixed using:

- tf.reshape()

- tf.expand_dims()

- tf.slice()

- tf.squeeze()
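
Before reaching for one of these, it helps to predict the shape each call will produce. The helpers below are hypothetical plain-Python functions that only do the shape arithmetic, under simplifying assumptions (non-negative axes, explicit sizes with no -1 wildcards); they are not the TensorFlow functions themselves.

```python
def reshape_shape(shape, new_shape):
    """Reshape keeps the element count; only the grouping changes."""
    def size(s):
        n = 1
        for d in s:
            n *= d
        return n
    if size(shape) != size(new_shape):
        raise ValueError("cannot reshape {} into {}".format(shape, new_shape))
    return tuple(new_shape)

def expand_dims_shape(shape, axis):
    """Insert a dimension of size 1 at position `axis`."""
    return tuple(shape[:axis]) + (1,) + tuple(shape[axis:])

def slice_shape(shape, begin, size):
    """A slice has the requested size, provided it fits inside the tensor."""
    for dim, b, s in zip(shape, begin, size):
        if b + s > dim:
            raise ValueError("slice exceeds dimension of size {}".format(dim))
    return tuple(size)

def squeeze_shape(shape):
    """Drop every dimension of size 1."""
    return tuple(d for d in shape if d != 1)

print(reshape_shape((2, 3), (3, 2)))       # (3, 2)
print(expand_dims_shape((3, 2), 1))        # (3, 1, 2)
print(slice_shape((3, 2), [0, 1], [2, 1])) # (2, 1)
print(squeeze_shape((2, 4, 1)))            # (2, 4)
```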

- tf.expand_dims inserts a dimension of 1 into a tensor’s shape:

    import tensorflow as tf

    x = tf.constant([[3, 2], [4, 5], [6, 7]])
    print("x.shape", x.shape)
    expanded = tf.expand_dims(x, 1)
    print("expanded.shape", expanded.shape)

    with tf.Session() as sess:
        print("expanded:\n", expanded.eval())

    # OUTPUT:
    # x.shape (3, 2)
    # expanded.shape (3, 1, 2)
    # expanded:
    # [[[3 2]]
    #  [[4 5]]
    #  [[6 7]]]

- tf.slice extracts a slice from a tensor:

    import tensorflow as tf

    x = tf.constant([[3, 2], [4, 5], [6, 7]])
    print("x.shape", x.shape)
    # Start at row 0, column 1; take 2 rows and 1 column
    sliced = tf.slice(x, [0, 1], [2, 1])
    print("sliced.shape", sliced.shape)

    with tf.Session() as sess:
        print("sliced:\n", sliced.eval())

    # OUTPUT:
    # x.shape (3, 2)
    # sliced.shape (2, 1)
    # sliced:
    # [[2]
    #  [5]]

- tf.squeeze removes dimensions of size 1 from the shape of a tensor:

    import tensorflow as tf

    t = tf.constant([[[1], [2], [3], [4]], [[5], [6], [7], [8]]])

    with tf.Session() as sess:
        print("t")
        print(sess.run(t))
        print("t squeezed")
        print(sess.run(tf.squeeze(t)))

    # OUTPUT:
    # t
    # [[[1]
    #   [2]
    #   [3]
    #   [4]]
    #
    #  [[5]
    #   [6]
    #   [7]
    #   [8]]]
    # t squeezed
    # [[1 2 3 4]
    #  [5 6 7 8]]

- Another common problem is data type:

  - The cause is usually that we are mixing types (e.g., adding a tensor of floats to a tensor of ints won’t work).

  - One solution is to do a cast with tf.cast().
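
The type-mixing failure and the cast fix can be mimicked in a few lines of plain Python. `TypedTensor` and `cast` below are hypothetical stand-ins written for this note; the dtype is just a string label here, so this shows the pattern (fail on mismatch, cast, then add) rather than TensorFlow's real type system.

```python
class TypedTensor:
    """A list of values tagged with a dtype name (hypothetical helper)."""
    def __init__(self, values, dtype):
        self.values, self.dtype = list(values), dtype

    def __add__(self, other):
        if self.dtype != other.dtype:
            raise TypeError("cannot add {} to {}".format(other.dtype, self.dtype))
        return TypedTensor([a + b for a, b in zip(self.values, other.values)],
                           self.dtype)

def cast(tensor, dtype):
    """Convert every value, returning a tensor tagged with the new dtype."""
    converters = {"float32": float, "int32": int}
    return TypedTensor([converters[dtype](v) for v in tensor.values], dtype)

floats = TypedTensor([1.5, 2.5], "float32")
ints = TypedTensor([1, 2], "int32")

try:
    floats + ints          # mixing types is rejected
    mixed_ok = True
except TypeError:
    mixed_ok = False

fixed = floats + cast(ints, "float32")  # cast first, then add
print(mixed_ok, fixed.values)  # False [2.5, 4.5]
```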

- To debug full-blown programs, there are three methods:

  - tf.Print()

  - tfdbg

  - TensorBoard

- Change the logging level from WARN:

    tf.logging.set_verbosity(tf.logging.INFO)

- tf.Print() can be used to log specific tensor values:

    import tensorflow as tf

    def some_method(a, b):
        b = tf.cast(b, tf.float32)
        s = (a / b)  # oops! NaN
        # Passes s through unchanged and logs a and b when evaluated
        print_ab = tf.Print(s, [a, b])
        # Route NaN entries through the printing op so the inputs get logged
        s = tf.where(tf.is_nan(s), print_ab, s)
        return tf.sqrt(tf.matmul(s, tf.transpose(s)))

    with tf.Session() as sess:
        fake_a = tf.constant([[5.0, 3.0, 7.1], [2.3, 4.1, 4.8]])
        fake_b = tf.constant([[2, 0, 5], [2, 8, 7]])
        print(sess.run(some_method(fake_a, fake_b)))

Run the script:

    %bash
    python xyz.py

Output:

    [[ nan nan]
     [ nan 1.43365264]]

- TensorFlow has a dynamic, interactive debugger (tfdbg):

    import tensorflow as tf
    from tensorflow.python import debug as tf_debug

    def some_method(a, b):
        b = tf.cast(b, tf.float32)
        s = (a / b)  # oops! NaN
        return tf.sqrt(tf.matmul(s, tf.transpose(s)))

    with tf.Session() as sess:
        fake_a = tf.constant([[5.0, 3.0, 7.1], [2.3, 4.1, 4.8]])
        fake_b = tf.constant([[2, 0, 5], [2, 8, 7]])
        # Wrap the session so run() drops into the tfdbg command-line interface
        sess = tf_debug.LocalCLIDebugWrapperSession(sess)
        sess.add_tensor_filter("has_inf_or_nan", tf_debug.has_inf_or_nan)
        print(sess.run(some_method(fake_a, fake_b)))

    # in a Terminal window
    # python xyz.py --debug
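
To see what a filter for bad values is looking for, here is a plain-Python sketch. `contains_inf_or_nan` and `safe_divide` are hypothetical helpers written for this note; they are not tfdbg's filter and do not share its signature. The division helper mimics floating-point behavior where dividing by zero yields inf or NaN instead of raising an error.

```python
import math

def contains_inf_or_nan(values):
    """Return True if any number in a nested list is NaN or infinite."""
    if isinstance(values, (list, tuple)):
        return any(contains_inf_or_nan(v) for v in values)
    return math.isnan(values) or math.isinf(values)

def safe_divide(a, b):
    """Elementwise a / b that yields inf or nan instead of raising."""
    out = []
    for x, y in zip(a, b):
        if y != 0:
            out.append(x / y)
        elif x == 0:
            out.append(float("nan"))                       # 0 / 0
        else:
            out.append(math.copysign(float("inf"), x))     # x / 0
    return out

fake_a = [5.0, 3.0, 7.1]   # first row of fake_a in the example above
fake_b = [2.0, 0.0, 5.0]   # first row of fake_b, already cast to float
s = safe_divide(fake_a, fake_b)

print(contains_inf_or_nan(s))  # True
```

Checking an intermediate value like `s` right after the division pinpoints the zero in `fake_b` much faster than inspecting the final result.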